Apache Hadoop pseudo-distributed mode lets you simulate a multi-node installation on a single node. Instead of installing Hadoop on different servers, you run all the Hadoop daemons on a single server.

Before you continue, make sure you understand the hadoop fundamentals, and have tested the standalone hadoop installation.

If you’ve already completed the first three steps mentioned below as part of the standalone hadoop installation, jump to step 4.

1. Create a Hadoop User

You can download and install hadoop as root. But, it is recommended to install it as a separate user. So, log in as root and create a user called hadoop.

# adduser hadoop
# passwd hadoop

2. Download Hadoop Common

Download the Apache Hadoop Common and move it to the server where you want to install it.

You can also use wget to download it directly to your server.

# su - hadoop
$ wget http://mirror.nyi.net/apache//hadoop/common/stable/hadoop-0.20.203.0rc1.tar.gz

Make sure Java 1.6 is installed on your system.

$ java -version
java version "1.6.0_20"
OpenJDK Runtime Environment (IcedTea6 1.9.7) (rhel-1.39.1.9.7.el6-x86_64)
OpenJDK 64-Bit Server VM (build 19.0-b09, mixed mode)

3. Unpack under hadoop User

As hadoop user, unpack this package.

$ tar xvfz hadoop-0.20.203.0rc1.tar.gz

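This will create a directory named after the release you unpacked. The rest of this article uses “hadoop-0.20.204.0”, so adjust the paths if your directory name differs.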

$ ls -l hadoop-0.20.204.0
total 6780
drwxr-xr-x. 2 hadoop hadoop 4096 Oct 12 08:50 bin
-rw-rw-r--. 1 hadoop hadoop 110797 Aug 25 16:28 build.xml
drwxr-xr-x. 4 hadoop hadoop 4096 Aug 25 16:38 c++
-rw-rw-r--. 1 hadoop hadoop 419532 Aug 25 16:28 CHANGES.txt
drwxr-xr-x. 2 hadoop hadoop 4096 Nov 2 05:29 conf
drwxr-xr-x. 14 hadoop hadoop 4096 Aug 25 16:28 contrib
drwxr-xr-x. 7 hadoop hadoop 4096 Oct 12 08:49 docs
drwxr-xr-x. 3 hadoop hadoop 4096 Aug 25 16:29 etc

Modify the hadoop-0.20.204.0/conf/hadoop-env.sh file and make sure the JAVA_HOME environment variable points to the location of the Java installation on your system.

$ grep JAVA ~/hadoop-0.20.204.0/conf/hadoop-env.sh
export JAVA_HOME=/usr/java/jdk1.6.0_27

After this step, hadoop will be installed under /home/hadoop/hadoop-0.20.204.0 directory.
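The JAVA_HOME setting above is easy to get wrong, so a quick check can save a failed start later. The following is a minimal sketch, assuming the value in hadoop-env.sh is written unquoted on an "export JAVA_HOME=..." line; check_java_home is a hypothetical helper name, not something shipped with Hadoop.

```shell
# Hypothetical helper (not part of Hadoop): confirm that the JAVA_HOME
# exported in a hadoop-env.sh file contains an executable bin/java.
check_java_home() {
    env_file=$1
    # Use the last uncommented "export JAVA_HOME=..." line, as the shell would.
    # Assumption: the value is not wrapped in quotes.
    java_home=$(sed -n 's/^[[:space:]]*export[[:space:]]\{1,\}JAVA_HOME=//p' "$env_file" | tail -n 1)
    if [ -z "$java_home" ]; then
        echo "JAVA_HOME is not set in $env_file"
        return 1
    fi
    if [ ! -x "$java_home/bin/java" ]; then
        echo "no executable found at $java_home/bin/java"
        return 1
    fi
    echo "JAVA_HOME looks usable: $java_home"
}
```

For example, run check_java_home ~/hadoop-0.20.204.0/conf/hadoop-env.sh after editing the file.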

4. Modify Hadoop Configuration Files

Add the <configuration> section shown below to the core-site.xml file. This indicates the HDFS default location and the port.

$ cat ~/hadoop-0.20.204.0/conf/core-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

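Add the <configuration> section shown below to the hdfs-site.xml file. This sets the block replication factor to 1, since there is only one datanode, and turns off HDFS permission checking.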

$ cat ~/hadoop-0.20.204.0/conf/hdfs-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

</configuration>

Add the <configuration> section shown below to the mapred-site.xml file. This indicates that the job tracker uses 9001 as the port.

$ cat ~/hadoop-0.20.204.0/conf/mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>localhost:9001</value>

</property>

</configuration>
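After editing the three files, it can help to read the values back and confirm there are no typos in the property names. The sketch below assumes the simple one-tag-per-line layout shown above; get_hadoop_property is a hypothetical helper name, and the awk matching is a rough text scan, not a real XML parser.

```shell
# Hypothetical helper: print the <value> that follows a given <name>
# in a Hadoop *-site.xml file. This is a plain text scan, not an XML parser.
get_hadoop_property() {
    # $1 = site XML file, $2 = property name
    awk -v want="$2" '
        /<name>/ {
            n = $0
            sub(/.*<name>/, "", n)
            sub(/<\/name>.*/, "", n)
        }
        /<value>/ {
            v = $0
            sub(/.*<value>/, "", v)
            sub(/<\/value>.*/, "", v)
            if (n == want) { print v; exit }
        }
    ' "$1"
}
```

For example, get_hadoop_property ~/hadoop-0.20.204.0/conf/core-site.xml fs.default.name should print the hdfs:// URL you configured. If it prints nothing, the property name in the file does not match.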

5. Set Up Passwordless SSH to localhost

In a typical Hadoop production environment you’ll be setting up this passwordless ssh access between the different servers. Since we are simulating a distributed environment on a single server, we need to set up passwordless ssh access to the localhost itself.

Use ssh-keygen to generate the private and public key pair.

$ ssh-keygen
Generating public/private rsa key pair.
Enter file in which to save the key (/home/hadoop/.ssh/id_rsa):
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /home/hadoop/.ssh/id_rsa.
Your public key has been saved in /home/hadoop/.ssh/id_rsa.pub.
The key fingerprint is:
02:5a:19:ab:1e:g2:1a:11:bb:22:30:6d:12:38:a9:b1 hadoop@hadoop
The key's randomart image is:
+--[ RSA 2048]----+
|oo |
|o + . . |
| + + o o |
|o .o = . |
| . += S |
|. o.o+. |
|. ..o. |
| . E .. |
| . .. |
+-----------------+

Add the public key to the authorized_keys. Just use the ssh-copy-id command, which will take care of this step automatically and assign appropriate permissions to these files.

$ ssh-copy-id -i ~/.ssh/id_rsa.pub localhost
hadoop@localhost's password:
Now try logging into the machine, with "ssh 'localhost'", and check in:
.ssh/authorized_keys
to make sure we haven't added extra keys that you weren't expecting.

Test the passwordless login to the localhost as shown below.

$ ssh localhost
Last login: Sat Jan 14 23:01:59 2012 from localhost

For more details on this, read 3 Steps to Perform SSH Login Without Password Using ssh-keygen & ssh-copy-id
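If ssh still prompts for a password after these steps, one common cause is that the .ssh directory or the authorized_keys file is writable by group or others, which sshd rejects under its default StrictModes setting. The following is a rough sketch of two checks; both function names are hypothetical, and the second one needs a running sshd on localhost.

```shell
# Hypothetical helper: fail if ~/.ssh or authorized_keys is missing,
# or is writable by group or others.
ssh_perms_ok() {
    dir=${1:-$HOME/.ssh}
    for p in "$dir" "$dir/authorized_keys"; do
        if [ ! -e "$p" ]; then
            echo "missing: $p"
            return 1
        fi
        # find prints the path only when a group- or world-write bit is set.
        if [ -n "$(find "$p" -prune \( -perm -020 -o -perm -002 \))" ]; then
            echo "too permissive: $p"
            return 1
        fi
    done
    echo "permissions look fine"
}

# Hypothetical helper: BatchMode makes ssh fail instead of prompting,
# so a zero exit status means key-based login worked.
ssh_passwordless_ok() {
    ssh -o BatchMode=yes -o ConnectTimeout=5 localhost true
}
```

Running ssh_perms_ok with no argument checks the current user's ~/.ssh directory. If it reports a problem, chmod 700 ~/.ssh and chmod 600 ~/.ssh/authorized_keys are the usual fixes.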

6. Format Hadoop NameNode

Format the namenode using the hadoop command as shown below. You’ll see the message “Storage directory /tmp/hadoop-hadoop/dfs/name has been successfully formatted” if this command works properly.

$ cd ~/hadoop-0.20.204.0
$ bin/hadoop namenode -format
12/01/14 23:02:27 INFO namenode.NameNode: STARTUP_MSG:
/************************************************************
STARTUP_MSG: Starting NameNode
STARTUP_MSG: host = hadoop/127.0.0.1
STARTUP_MSG: args = [-format]
STARTUP_MSG: version = 0.20.204.0
STARTUP_MSG: build = git://hrt8n35.cc1.ygridcore.net/ on branch branch-0.20-security-204 -r 65e258bf0813ac2b15bb4c954660eaf9e8fba141; compiled by 'hortonow' on Thu Aug 25 23:35:31 UTC 2011
************************************************************/
12/01/14 23:02:27 INFO util.GSet: VM type = 64-bit
12/01/14 23:02:27 INFO util.GSet: 2% max memory = 17.77875 MB
12/01/14 23:02:27 INFO util.GSet: capacity = 2^21 = 2097152 entries
12/01/14 23:02:27 INFO util.GSet: recommended=2097152, actual=2097152
12/01/14 23:02:27 INFO namenode.FSNamesystem: fsOwner=hadoop
12/01/14 23:02:27 INFO namenode.FSNamesystem: supergroup=supergroup
12/01/14 23:02:27 INFO namenode.FSNamesystem: isPermissionEnabled=true
12/01/14 23:02:27 INFO namenode.FSNamesystem: dfs.block.invalidate.limit=100
12/01/14 23:02:27 INFO namenode.FSNamesystem: isAccessTokenEnabled=false accessKeyUpdateInterval=0 min(s), accessTokenLifetime=0 min(s)
12/01/14 23:02:27 INFO namenode.NameNode: Caching file names occuring more than 10 times
12/01/14 23:02:27 INFO common.Storage: Image file of size 112 saved in 0 seconds.
12/01/14 23:02:27 INFO common.Storage: Storage directory /tmp/hadoop-hadoop/dfs/name has been successfully formatted.
12/01/14 23:02:27 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at hadoop/127.0.0.1
************************************************************/

7. Start All Hadoop Related Services

Use the ~/hadoop-0.20.204.0/bin/start-all.sh script to start all hadoop related services. This will start the namenode, datanode, secondary namenode, jobtracker, tasktracker, etc.

$ bin/start-all.sh
starting namenode, logging to /home/hadoop/hadoop-0.20.204.0/libexec/../logs/hadoop-hadoop-namenode-hadoop.out
localhost: starting datanode, logging to /home/hadoop/hadoop-0.20.204.0/libexec/../logs/hadoop-hadoop-datanode-hadoop.out
localhost: starting secondarynamenode, logging to /home/hadoop/hadoop-0.20.204.0/libexec/../logs/hadoop-hadoop-secondarynamenode-hadoop.out
starting jobtracker, logging to /home/hadoop/hadoop-0.20.204.0/libexec/../logs/hadoop-hadoop-jobtracker-hadoop.out
localhost: starting tasktracker, logging to /home/hadoop/hadoop-0.20.204.0/libexec/../logs/hadoop-hadoop-tasktracker-hadoop.out
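The start-all.sh messages only say that each daemon was launched, not that it stayed up. The JDK's jps tool lists running Java processes, so its output can be scanned for the five daemons named above. This is a minimal sketch; missing_daemons is a hypothetical helper that reads jps output on standard input and prints the name of every expected daemon it does not find.

```shell
# Hypothetical helper: read `jps` output on stdin and print each expected
# Hadoop daemon that is NOT listed. No output means all five are running.
missing_daemons() {
    jps_out=$(cat)
    for d in NameNode DataNode SecondaryNameNode JobTracker TaskTracker; do
        # jps prints "<pid> <class name>"; compare the second field exactly
        # so that NameNode does not match SecondaryNameNode.
        printf '%s\n' "$jps_out" |
            awk -v d="$d" '$2 == d { found = 1 } END { exit !found }' ||
            echo "$d"
    done
}
```

Use it as jps | missing_daemons. If a daemon is reported missing, its log file under the logs directory shown in the start-all.sh output is the first place to look.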

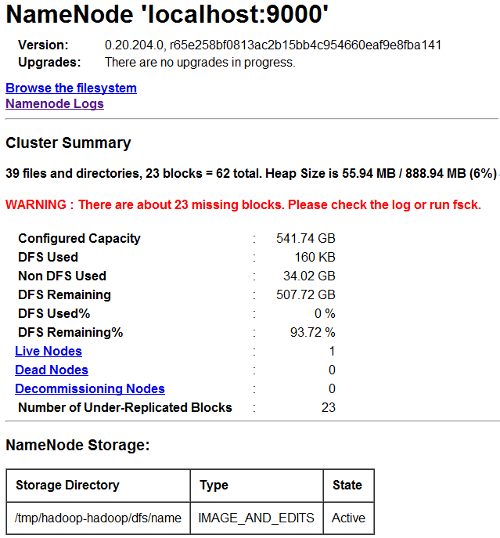

8. Browse NameNode and JobTracker Web GUI

Once all the Hadoop processes are started, you can view the health and status of the HDFS from a web interface. Use http://{your-hadoop-server-ip}:50070/dfshealth.jsp

For example, if you’ve installed hadoop on a server with IP address 192.168.1.10, then use http://192.168.1.10:50070/dfshealth.jsp to view the NameNode GUI.

This will display the following information:

Basic NameNode information:

- This shows when the NameNode was started, the hadoop version number, and whether any upgrades are currently in progress.

- This also has a “Browse the filesystem” link, which lets you browse the content of the HDFS filesystem from the browser

- Click on “Namenode Logs” to view the logs

Cluster Summary displays the following information:

- Total number of files and directories managed by the HDFS

- Any warning message (for example: missing blocks as shown in the image below)

- Total HDFS file system size

- HDFS space used, both as a percentage and as a size

- Total number of nodes in this distributed system

NameNode storage information: This displays the storage directory of the HDFS file system, the filesystem type, and the state (Active or not)

To access the JobTracker web interface, use http://{your-hadoop-server-ip}:50030, which is the default JobTracker HTTP port in this Hadoop release. Port 50090 is the default web port of the secondary namenode.

For example, if you’ve installed hadoop on a server with IP address 192.168.1.10, then use http://192.168.1.10:50030/ to view the JobTracker GUI.

As shown by the netstat command below, you can check which of the Hadoop web ports are in use.

$ netstat -a | grep 500
tcp 0 0 *:50090 *:* LISTEN
tcp 0 0 *:50070 *:* LISTEN
tcp 0 0 hadoop.thegeekstuff.com:50090 ::ffff:192.168.1.98:55923 ESTABLISHED
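Grepping for 500 also matches unrelated lines, so when a web page does not load it can be clearer to test one port at a time. The sketch below is a hypothetical helper that reads numeric netstat output (for example from netstat -tln) on standard input and succeeds only if the given port is in the LISTEN state.

```shell
# Hypothetical helper: succeed if the given TCP port appears in LISTEN
# state in numeric netstat output supplied on stdin.
port_is_listening() {
    port=$1
    awk -v port="$port" '
        # Field 4 is the local address; match ":<port>" at its end.
        $NF == "LISTEN" && $4 ~ (":" port "$") { found = 1 }
        END { exit !found }
    '
}
```

For example: netstat -tln | port_is_listening 50070 && echo "NameNode web UI port is open". If the port is listening but the browser still cannot connect, a firewall on the server is a likely cause.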

9. Test Sample Hadoop Program

This example program ships with hadoop, and the hadoop documentation uses it as a simple example to check whether the setup works.

For testing purposes, add some sample data files to the input directory. Let us just copy all the xml files from the conf directory to the input directory, so these xml files will be the data files for the example program. In the standalone version, you used the standard cp command to copy them to the input directory.

However, in a distributed Hadoop setup, you’ll be using the -put option of the hadoop command to add files to the HDFS file system. Keep in mind that you are not adding the files to a Linux filesystem; you are adding the input files to the Hadoop Distributed File System. So, you use the hadoop command to do this.

$ cd ~/hadoop-0.20.204.0
$ bin/hadoop fs -put conf input

Execute the sample hadoop test program. This is a simple hadoop program that simulates a grep. It searches for the regular expression “dfs[a-z.]+” in all the input/*.xml files (which are stored in HDFS) and stores the results in the output directory, which is also created in HDFS.

$ bin/hadoop jar hadoop-examples-*.jar grep input output 'dfs[a-z.]+'

When everything is set up properly, the above sample hadoop test program will display the following messages on the screen while it runs.

$ bin/hadoop jar hadoop-examples-*.jar grep input output 'dfs[a-z.]+'
12/01/14 23:45:02 INFO mapred.FileInputFormat: Total input paths to process : 18
12/01/14 23:45:02 INFO mapred.JobClient: Running job: job_201111020543_0001
12/01/14 23:45:03 INFO mapred.JobClient: map 0% reduce 0%
12/01/14 23:45:18 INFO mapred.JobClient: map 11% reduce 0%
12/01/14 23:45:24 INFO mapred.JobClient: map 22% reduce 0%
12/01/14 23:45:27 INFO mapred.JobClient: map 22% reduce 3%
12/01/14 23:45:30 INFO mapred.JobClient: map 33% reduce 3%
12/01/14 23:45:36 INFO mapred.JobClient: map 44% reduce 7%
12/01/14 23:45:42 INFO mapred.JobClient: map 55% reduce 14%
12/01/14 23:45:48 INFO mapred.JobClient: map 66% reduce 14%
12/01/14 23:45:51 INFO mapred.JobClient: map 66% reduce 18%
12/01/14 23:45:54 INFO mapred.JobClient: map 77% reduce 18%
12/01/14 23:45:57 INFO mapred.JobClient: map 77% reduce 22%
12/01/14 23:46:00 INFO mapred.JobClient: map 88% reduce 22%
12/01/14 23:46:06 INFO mapred.JobClient: map 100% reduce 25%
12/01/14 23:46:15 INFO mapred.JobClient: map 100% reduce 100%
12/01/14 23:46:20 INFO mapred.JobClient: Job complete: job_201111020543_0001
...

The above command creates the output directory in HDFS. To view the results, copy that directory to the local filesystem using the “-get” option of the hadoop command, as shown below.

$ bin/hadoop fs -get output output
$ ls -l output
total 4
-rwxrwxrwx. 1 root root 11 Aug 23 08:39 part-00000
-rwxrwxrwx. 1 root root 0 Aug 23 08:39 _SUCCESS
$ cat output/*
1 dfsadmin
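To sanity-check the job's result, roughly the same count can be produced on the local copies of the input files with ordinary command-line tools. This is only a sketch of what the example job computes (each distinct match of the pattern with its number of occurrences, most frequent first), not how Hadoop implements it. The function name local_grep_count is hypothetical, and the -o and -h options of grep are assumed to be available, as they are in GNU and BSD grep.

```shell
# Hypothetical helper: count each distinct match of an extended regular
# expression across local files, most frequent first.
local_grep_count() {
    pattern=$1
    shift
    # -o prints only the matched text, -h omits file names, -E enables ERE.
    grep -ohE "$pattern" "$@" |
        sort |
        uniq -c |
        sort -rn |
        awk '{ print $1, $2 }'
}
```

For example, local_grep_count 'dfs[a-z.]+' conf/*.xml run from the hadoop directory should list the same strings and counts as the part-00000 file, provided the local conf files are the ones you uploaded with -put.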

10. Troubleshooting Hadoop Issues

Issue 1: “Temporary failure in name resolution”

While executing the sample hadoop program, you might get the following error message.

12/01/14 07:34:57 INFO mapred.JobClient: Cleaning up the staging area file:/tmp/hadoop-root/mapred/staging/root-1040516815/.staging/job_local_0001

java.net.UnknownHostException: hadoop: hadoop: Temporary failure in name resolution

at java.net.InetAddress.getLocalHost(InetAddress.java:1438)

at org.apache.hadoop.mapred.JobClient$2.run(JobClient.java:815)

at org.apache.hadoop.mapred.JobClient$2.run(JobClient.java:791)

at java.security.AccessController.doPrivileged(Native Method)

Solution 1: Add an entry to the /etc/hosts file that contains the IP address, the fully qualified domain name (FQDN), and the host name, as shown below.

192.168.1.10 hadoop.thegeekstuff.com hadoop
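Before rerunning the job, you can confirm that the server's own host name really appears in the hosts file. The following is a minimal sketch; hosts_has_name is a hypothetical helper that scans a hosts-format file for a name, skipping comment lines and trailing comments.

```shell
# Hypothetical helper: succeed if the given name appears as a host name
# or alias (any field after the IP address) in a hosts-format file.
hosts_has_name() {
    hosts_file=$1
    name=$2
    awk -v name="$name" '
        /^[ \t]*#/ { next }                 # skip comment lines
        {
            for (i = 2; i <= NF; i++) {
                if ($i ~ /^#/) break        # ignore trailing comments
                if ($i == name) found = 1
            }
        }
        END { exit !found }
    ' "$hosts_file"
}
```

For example: hosts_has_name /etc/hosts "$(hostname)" || echo "host name is missing from /etc/hosts".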

Issue 2: “localhost: Error: JAVA_HOME is not set”

While executing hadoop start-all.sh, you might get this error as shown below.

$ bin/start-all.sh
starting namenode, logging to /home/hadoop/hadoop-0.20.204.0/libexec/../logs/hadoop-hadoop-namenode-hadoop.out
localhost: starting datanode, logging to /home/hadoop/hadoop-0.20.204.0/libexec/../logs/hadoop-hadoop-datanode-hadoop.out
localhost: Error: JAVA_HOME is not set.
localhost: starting secondarynamenode, logging to /home/hadoop/hadoop-0.20.204.0/libexec/../logs/hadoop-hadoop-secondarynamenode-hadoop.out
localhost: Error: JAVA_HOME is not set.
starting jobtracker, logging to /home/hadoop/hadoop-0.20.204.0/libexec/../logs/hadoop-hadoop-jobtracker-hadoop.out
localhost: starting tasktracker, logging to /home/hadoop/hadoop-0.20.204.0/libexec/../logs/hadoop-hadoop-tasktracker-hadoop.out
localhost: Error: JAVA_HOME is not set.

Solution 2: Make sure JAVA_HOME is set properly in conf/hadoop-env.sh as shown below.

$ grep JAVA_HOME conf/hadoop-env.sh
export JAVA_HOME=/usr/java/jdk1.6.0_27

Issue 3: Error while executing “bin/hadoop fs -put conf input”

You might get one of the following error messages (including put: org.apache.hadoop.security.AccessControlException: Permission denied:) while executing the hadoop fs put command as shown below.

$ bin/hadoop fs -put conf input
12/01/14 23:21:53 INFO ipc.Client: Retrying connect to server: localhost/127.0.0.1:9000. Already tried 7 time(s).
12/01/14 23:21:54 INFO ipc.Client: Retrying connect to server: localhost/127.0.0.1:9000. Already tried 8 time(s).
12/01/14 23:21:55 INFO ipc.Client: Retrying connect to server: localhost/127.0.0.1:9000. Already tried 9 time(s).
Bad connection to FS. command aborted. exception: Call to localhost/127.0.0.1:9000 failed on connection exception: java.net.ConnectException: Connection refused
$ bin/hadoop fs -put conf input
put: org.apache.hadoop.security.AccessControlException: Permission denied: user=hadoop, access=WRITE, inode="":root:supergroup:rwxr-xr-x
$ ls -l input

Solution 3: Make sure the /etc/hosts file is set up properly. A “Connection refused” message means nothing is listening on localhost:9000, so also check that the namenode is running. If your HDFS filesystem was not created properly, you might also have issues during “hadoop fs -put”. Format your HDFS using “bin/hadoop namenode -format” and confirm that this displays the “successfully formatted” message.

Issue 4: While executing start-all.sh (or start-dfs.sh), you might get this error message: “localhost: Unrecognized option: -jvm localhost: Could not create the Java virtual machine.”

Solution 4: This might happen if you’ve installed hadoop as root and are trying to start the processes as root. This is a known bug, which is fixed according to this bug report. But, if you hit this bug, try installing hadoop under a non-root account (as explained in this article), which should fix the issue.

My name is Ramesh Natarajan. I will be posting instruction guides, how-to, troubleshooting tips and tricks on Linux, database, hardware, security and web. My focus is to write articles that will either teach you or help you resolve a problem. Read more about


good~

thank you for share it~

And expect your next hadoop article…

Nice walkthrough!!

Thanks

Good article!

Thanks for your writing.

I hope I see your next articles as soon as possible!

Very Good article. Keep it up.

The passwordless ssh did not work for me. Keep getting asked for password – but had specified blank. Keep getting authentication failure.

Wonderful step-by-step explanation.

Became a fan of this website.

Ramesh,

Do we have an article here, explaining setting up a fully-distributed hadoop cluster

my all nodes are started .. but i got this error. plz help me how to resolve this problem

bhargav@ubuntu:~$ jps

4391 SecondaryNameNode

3071 NameNode

4433 Jps

3650 JobTracker

3307 DataNode

3891 TaskTracker

bhargav@ubuntu:~$ hadoop fs -ls

Warning: $HADOOP_HOME is deprecated.

Bad connection to FS. command aborted. exception: No FileSystem for scheme: http

bhargav@ubuntu:~$

To bhargav: if you haven’t figured this out yet, I bet you specified “http:” as the protocol for the fs.default.name property in core-site.xml. I made the same mistake. The error message is telling you that Hadoop has no filesystem for the “http” scheme. The protocol should be “hdfs:”, not “http:”.

I am having my hadoop stuck at 0% 0% and having an error as failed to get system directory

I get random error as jobtracker not running even when i can see my job tracker running in JPS ,how can i troubleshoot all these?

This is really a great Hadoop installation tutorial. Thanks a lot!

Hi,

I am Having the following error –

Incorrect configuration: namenode address dfs.namenode.servicerpc-address or dfs.namenode.rpc-address is not configured.

Hi,

First of all, thanks for sharing this article, it really helped a lot. But, there is still something which I think is missing. I followed the steps and installed the pseudo cluster on my machine and everything worked fine. I can see all the components running. but, it is not opening in the browser, even though I have disabled SELinux, still I am getting tcp connection refused error.

I would really appreciate If someone could help me out.

Thanks in advance 🙂

Hello Abhishek,

Did you stop iptables before accessing the UI?

Sincerely

Bo.